We’ve covered a lot of local LLMs on It's FOSS. You can use them as coding assistants or run them on your tiny Raspberry Pi setups.

But recently, I’ve noticed many comments asking about local AI tools to interact with PDFs and documents.

Now, during my research, I stumbled upon countless AI-powered websites that promise to summarize, query, or analyze PDFs.

Some were sleek and polished, but, unsurprisingly, most were paid or offered only a limited “free tier.” And let’s be honest: when you’re uploading documents to a cloud service, there’s no real guarantee of privacy.

That’s why I’ve put together this list of open-source AI projects that let you interact with PDFs locally. With these tools, your data stays on your machine, offline and under your control.

Whether you’re summarizing long research papers, extracting key insights, or just searching for specific details, these tools will have your back.

Let’s dive in!

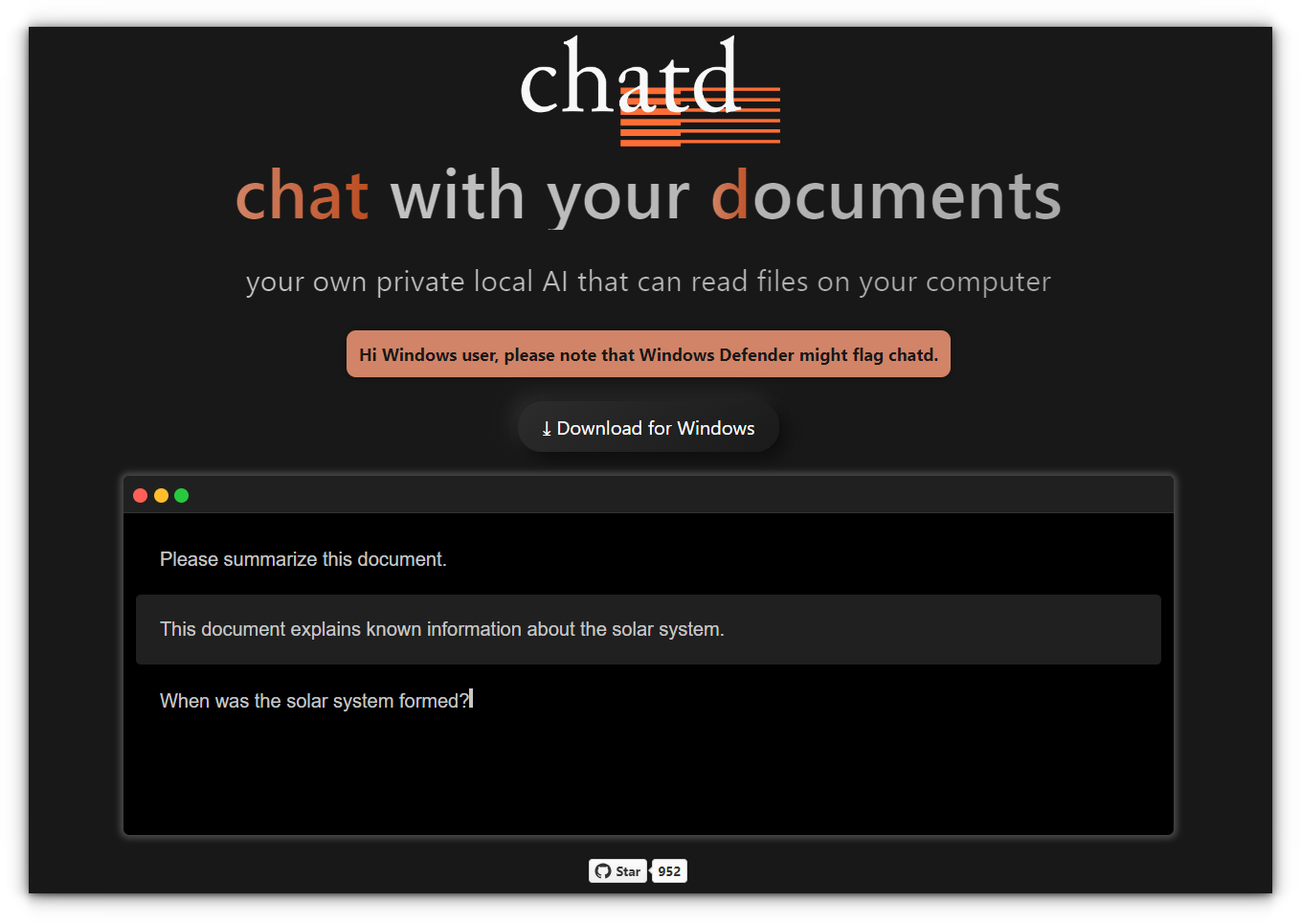

1. Chatd

chatd is a desktop application that allows you to chat with your documents locally using a large language model.

Unlike many similar tools, chatd comes with a built-in LLM runner, so you don’t need to install anything extra: just download, unzip, and run the executable.

Key features:

- All your data stays on your computer and is never sent to the cloud.

- Comes pre-packaged with Ollama, a local LLM server that manages the language model for you. If you already have Ollama running, chatd will automatically use it.

- Works seamlessly on Windows, macOS, and Linux.

- Advanced users can enable GPU support or select a custom LLM.
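chatd hands the model work to Ollama, which runs as a local HTTP server. To illustrate what talking to such a local LLM server looks like, here is a minimal sketch (not chatd’s own code) that only builds, and does not send, a request for Ollama’s `/api/generate` endpoint. It assumes Ollama is listening on its default address, `http://localhost:11434`, and the model name `llama3` is a placeholder for whatever model you have pulled.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_ollama_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request for a local Ollama server.

    The default model name is a placeholder; use any model you have pulled locally.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_ollama_request("Summarize this paragraph in one sentence: ...")
    print(req.full_url, req.get_method())
    # With Ollama running, send the request and read the generated text:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.loads(resp.read())["response"])
```

Nothing leaves your machine here: the request targets `localhost`, and actually sending it requires a running Ollama instance.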

2. localGPT

localGPT is an open-source tool that lets you interact with your documents securely and locally.

Built with privacy in mind, localGPT ensures that no data ever leaves your computer, making it a great fit for privacy-conscious users.

Key features:

- All processing happens on your machine, ensuring no external data leaks.

- Supports popular open-source model formats, including Hugging Face (HF) models, GPTQ, GGML, and GGUF.

- Uses LangChain and ChromaDB to run a fully local Retrieval-Augmented Generation (RAG) pipeline.

- Comes with two GUIs: one that works through the API and a standalone one built with Streamlit.

- Optional session-based history to remember your previous questions.

- Supported file formats: PDF, TXT, CSV, DOCX, Markdown, and more. You can add custom loaders via LangChain.
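To make the RAG idea concrete: before the model answers, the pipeline retrieves the document chunks most relevant to your question and pastes them into the prompt. localGPT does this with LangChain, ChromaDB, and an embedding model. The toy sketch below swaps all of those for plain word-count vectors and cosine similarity, so it shows the retrieve-then-prompt flow rather than localGPT’s actual code. The function names and prompt template are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question (the 'retrieval' in RAG)."""
    q = vectorize(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Hypothetical prompt template: retrieved chunks first, then the question."""
    joined = "\n---\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


if __name__ == "__main__":
    chunks = [
        "The warranty covers parts and labour for two years.",
        "Returns are accepted within 30 days of purchase.",
        "The device charges fully in about three hours.",
    ]
    top = retrieve("How long is the warranty?", chunks, k=1)
    print(build_prompt("How long is the warranty?", top))
```

In this example, the warranty sentence wins because it shares the most words with the question. A real embedding model would also match paraphrases that share no words at all, which is why tools like localGPT use one.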

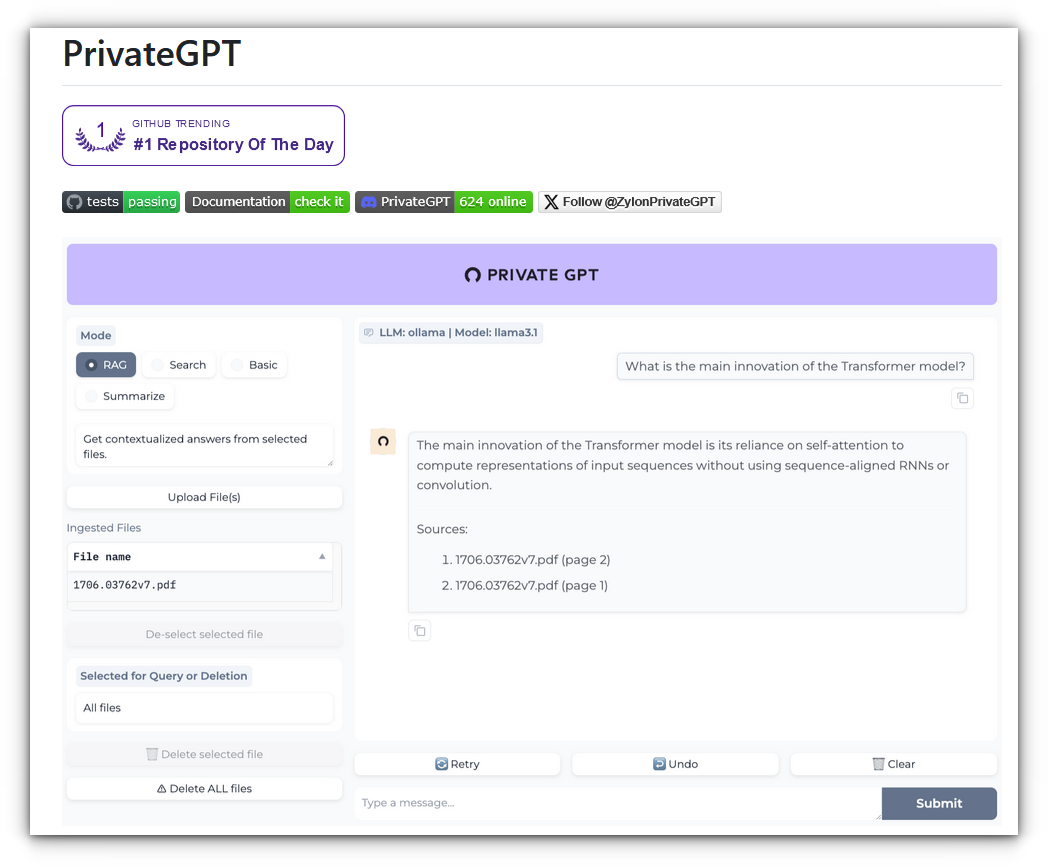

3. PrivateGPT

PrivateGPT is a production-ready, privacy-focused AI project that enables you to interact with your documents using Large Language Models (LLMs), completely offline.

No data ever leaves your local environment, making it ideal for privacy-sensitive industries like healthcare, legal, or finance.

Having personally used this project, I highly recommend it for its privacy and performance once set up.

Key features:

- 100% offline, no internet connection required.

- Built on a robust Retrieval-Augmented Generation pipeline.

- Offers OpenAI-compatible APIs for building private, context-aware AI applications.

- Includes a user-friendly Gradio UI for interacting with your documents.

- Uses LlamaIndex for document ingestion and the RAG pipeline, and FastAPI for its API layer, making it extensible and easy to integrate.

- Provides tools for advanced users to customize embedding generation and document chunk retrieval.
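One of the knobs behind document chunk retrieval is how documents are split before they are embedded. A common approach is overlapping chunks, so text near a boundary appears in both neighbouring chunks and is less likely to lose its context. PrivateGPT delegates this step to LlamaIndex. The plain-Python sketch below is not its implementation, and the word-based `chunk_size` and `overlap` parameters are illustrative assumptions (real splitters often count tokens instead).

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of up to `chunk_size` words, where each chunk
    repeats the last `overlap` words of the previous one.

    Sizes are counted in whitespace-separated words, a simplification.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap  # how far each new chunk's start moves forward
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reached the end of the text
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_words(sample, chunk_size=4, overlap=1):
        print(chunk)
```

Running it prints `w0 w1 w2 w3`, `w3 w4 w5 w6`, and `w6 w7 w8 w9`. Larger overlaps preserve more context across boundaries at the cost of more chunks to embed and search.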

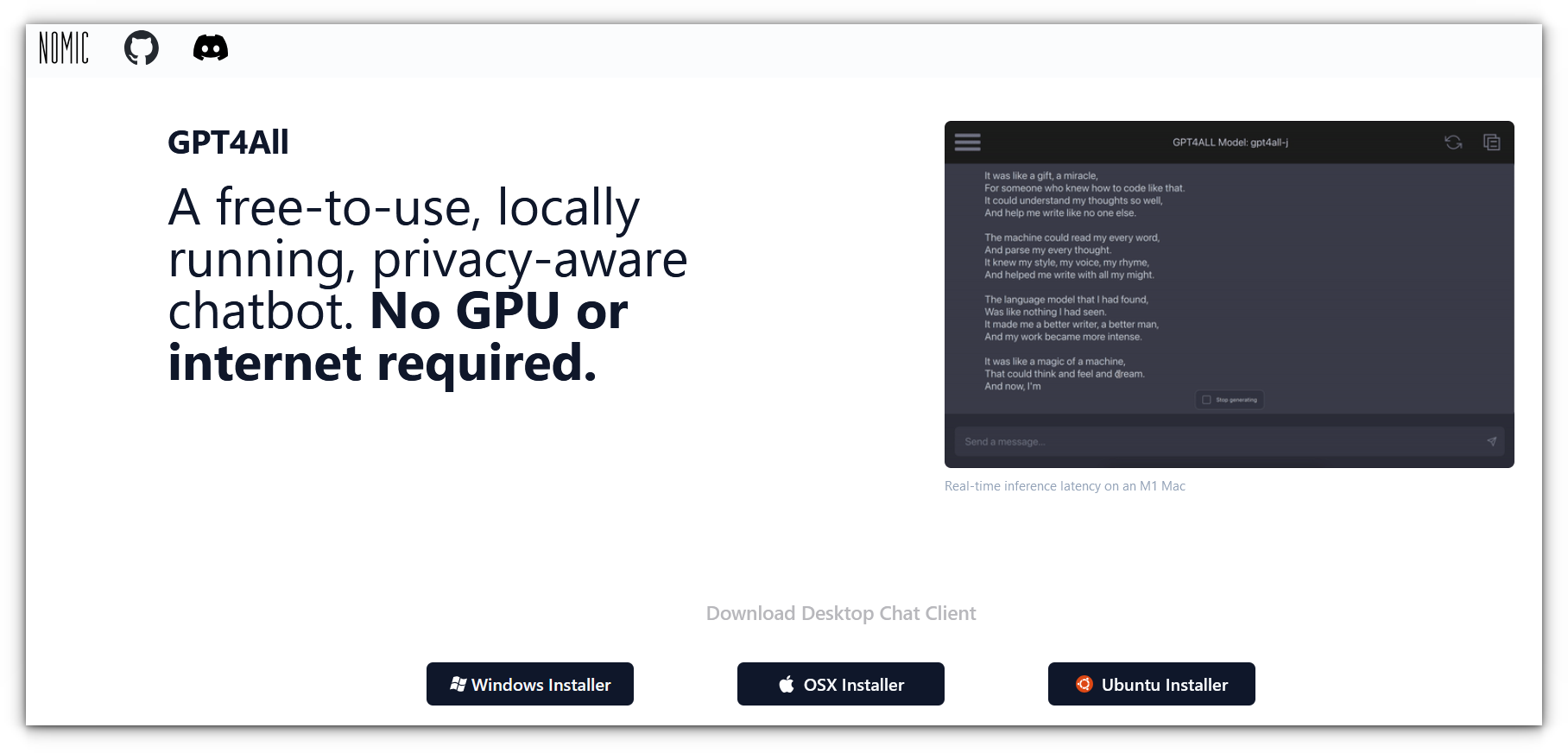

4. GPT4All

GPT4All is another open-source project that lets you run large language models (LLMs) offline on everyday desktops and laptops, with no internet connection, API calls, or GPU required.

The application is designed to run well on a wide range of hardware, which makes it a good choice for privacy-conscious users who want to chat with a model or with their own documents locally.

Key features:

- Run LLMs locally without the need for cloud-based API calls.

- Works entirely offline, ensuring privacy and control over your data.

- Download and install the application on Windows, macOS, or Linux to get started immediately.

- GPT4All offers a Python client for integrating LLMs into your own applications.

- The LocalDocs feature allows you to privately chat with your documents, offering a secure way to interact with local data.

- Can be integrated with LangChain for extended functionality, including access to external vector databases such as Weaviate.

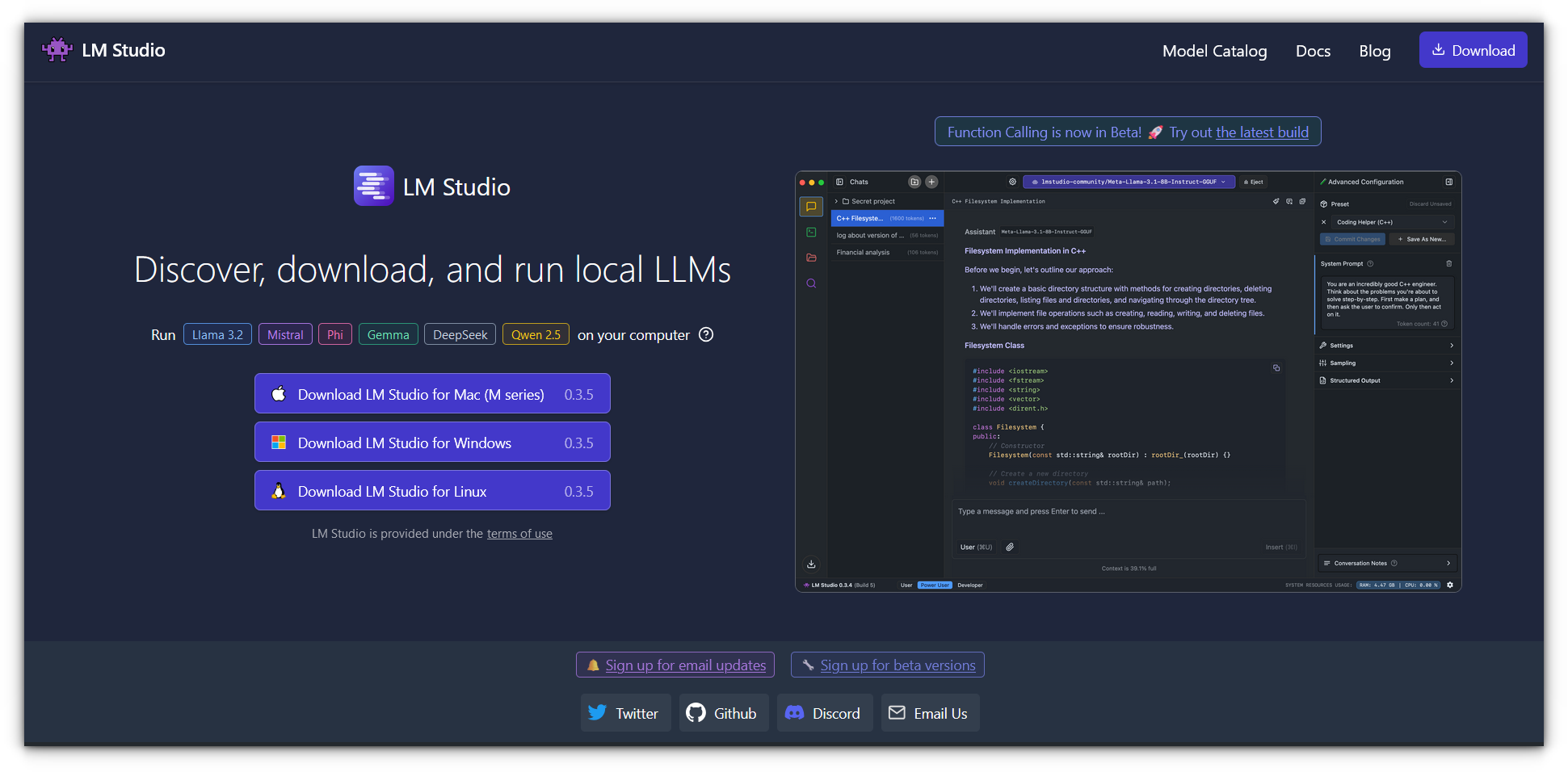

5. LM Studio (Editor's Choice ⭐)

LM Studio has become my go-to tool for daily use, and it’s easily my favorite project in this space.

Its latest release (version 0.3) introduced the ability to chat with your documents, a beta feature that has worked exceptionally well for me so far.

Key features:

- LM Studio lets you download LLMs directly from Hugging Face using its in-app browser.

- Use a simple, user-friendly interface to chat with AI models for tasks like answering questions, generating text, or analyzing content.

- Since version 0.3, you can upload documents and interact with them locally (still in beta).

- It works as a local server, allowing seamless integration of AI models into your projects without relying on third-party services.

- On-demand model loading helps optimize system resources by loading models only when needed.

- Explore trending and noteworthy LLMs in the app’s Discover page.

- It also supports vision-enabled models, such as Mistral AI’s Pixtral.

- Available for Windows, Linux, and Apple Silicon Macs.
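When LM Studio runs as a local server, it exposes OpenAI-compatible endpoints, by default under `http://localhost:1234/v1`. The minimal sketch below builds, but does not send, a standard chat-completions request for that server. It assumes you have kept the default port, and `local-model` is a placeholder for the identifier of whichever model you have loaded.

```python
import json
import urllib.request

# Assumption: LM Studio's server is running on its default port.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(question: str, model: str = "local-model") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    "local-model" is a placeholder; use the identifier of a model loaded in LM Studio.
    """
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You answer questions about the user's documents."},
            {"role": "user", "content": question},
        ],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("What are the key findings of chapter 2?")
    print(req.full_url)
    # With the server running, send it and read the first choice:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.loads(resp.read())["choices"][0]["message"]["content"])
```

Because the request follows the OpenAI format, existing OpenAI client code can often be pointed at the local server by changing only the base URL, which is what makes this kind of integration straightforward.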

Wrapping up

Personally, I use LM Studio daily. As a university student, reading through PDFs day in and day out can be quite tiresome. That's why I like to fiddle around with such projects and look for what best suits my workflow.

I started with PrivateGPT, but once I tried LM Studio, I instantly fell in love with its clean UI and the ease of downloading models.

I’ve also experimented with Ollama paired with Open WebUI, which worked well, but LM Studio has become my go-to tool for handling documents efficiently.

On a related note, you may also be interested in local AI tools for image generation.

These are the projects I recommend for interacting with PDF documents. If you know of other tools that offer similar functionality, feel free to share them with the community in the comments below!